Works Cited

Adam and Viprey 2009 Adam, J.-M. and Viprey,

J.-M. “Corpus de textes, textes en corpus. Problématique et

présentation”. Corpus 8:5–25.

Anttila and Heuser 2016 Anttila, A. and

Heuser, R. “Phonological and Metrical Variation across

Genres”. Proceedings of the Annual Meetings on

Phonology. Linguistic Society of America, Washington, DC

(2016).

Apollinaire 1994 Apollinaire, G. Œuvres Poétiques, M. Adéma et M. Décaudin eds.,

Bibliothèque de la Pléiade, Gallimard, Paris, France (1994).

Apollinaire 2005 Apollinaire, G. Lettres à Madeleine. Tendre Comme Le Souvenir,

Gallimard, Paris, France (2005).

Bacciu et al. 2019 Bacciu, A., La Morgia, M., Mei,

A., Nerio Nemmi, E., Neri, V. and Stefa, J. “Cross-domain

Authorship Attribution Combining Instance Based and Profile Based

Features”. In: Cappellato, L., Ferro, N., Losada, D. E. and Müller,

H. (eds.). CLEF 2019 Labs and Workshops, Notebook

Paper. (2019).

Baird 2004 Baird, D. Thing

Knowledge: A Philosophy of Scientific Instruments, University of

California Press, Berkeley (2004).

Bal 2017 Bal, M. Narratology:

Introduction to the Theory of Narrative, Fourth Edition, University

of Toronto Press, Toronto Buffalo London (2017).

Beaudouin 2002 Beaudouin, V. Mètre et Rythme Du Vers Classique. Corneille et

Racine, Honoré Champion, Paris (2002).

Berger and Luckmann 1967 Berger, P. and

Luckmann, T. The Social Construction of Reality: A Treatise

in the Sociology of Knowledge, Doubleday, Garden City, NY

(1967).

Bhatia et al. 2018 Bhatia, S., Lau, J.H. and

Baldwin, T. “Topic Intrusion for Automatic Topic Model

Evaluation”. Proceedings of the 2018 Conference

on Empirical Methods in Natural Language Processing. Association for

Computational Linguistics, Brussels, Belgium (2018), pp. 844–849.

Binder and Jennings 2014 Binder, J.M. and

Jennings, C. “Visibility and meaning in topic models and

18th-century subject indexes”.

Literary and

Linguistic Computing 29(3):405–411. doi:

https://doi.org/10.1093/llc/fqu017

Birnbaum and Thorsen 2015 Birnbaum, D.J.

and Thorsen, E. “Markup and meter: Using XML tools to teach

a computer to think about versification”.

Proceedings of Balisage: The Markup Conference. (2015).

doi:10.4242/BalisageVol15.Birnbaum01

Bobenhausen and Hammerich 2015 Bobenhausen, K. and Hammerich, B. “Métrique littéraire,

métrique linguistique et métrique algorithmique de l’allemand mises en jeu

dans le programme Metricalizer”.

Langages 199(3):67–88. doi:

https://doi.org/10.3917/lang.199.0067

Bode 2018 Bode, K. A World of

Fiction: Digital Collections and the Future of Literary History,

University of Michigan Press (2018).

Bories 2020 Bories, A.-S. Des Chiffres et Des

Mètres. La Versification de Raymond Queneau. Honoré

Champion, Paris, France (2020).

Bories et al. 2021 Bories, A.-S., Purnelle, G. and

Marchal, H. Plotting Poetry: On Mechanically-Enhanced

Reading, Presses Universitaires de Liège, Liège (2021).

Bories et al. 2023 Bories, A.-S., Plecháč, P.

and Ruiz Fabo, P. Computational Stylistics in Poetry,

Prose, and Drama, De Gruyter (2023).

Brunet 1989 Brunet, É. “Hyperbase: Logiciel Documentaire et Statistique pour l’Exploitation des

Grands Corpus”. Tools for humanists, pp. 33–36.

Bubenhofer and Dreesen 2018 Bubenhofer, N. and Dreesen, P. “Linguistik als antifragile

Disziplin? Optionen in der digitalen Transformation”.

Digital Classics Online, pp. 63–75. doi:

https://doi.org/10.11588/dco.2017.0.48493

Buchanan n.d. Buchanan, J. “Collaborating With the Dead: Revivifying Frank Benson’s Richard

III”. Booklet published by Silents Now.

Burrows 2002 Burrows, J. “‘Delta:’ a Measure of Stylistic Difference and a Guide to Likely

Authorship”.

Literary and Linguistic

Computing 17(3):267–287. doi:

https://doi.org/10.1093/llc/17.3.267

Calzolari et al. 2012 Calzolari, N., Del

Gratta, R., Francopoulo, G., Mariani, J., Rubino, F., Russo, I. and Soria, C.

“The LRE Map. Harmonising Community Descriptions of

Resources”. Proceedings of LREC 2012, Eighth

International Conference on Language Resources and Evaluation.

Istanbul, Turkey (2012), pp. 1084–1089.

Daelemans et al. 2019 Daelemans, W., Kestemont,

M., Manjavacas, E., Potthast, M., Rangel, F., Rosso, P., Specht, G., Stamatatos,

E., Stein, B., Tschuggnall, M., Wiegmann, M. and Zangerle, E. “Overview of PAN 2019: Bots and Gender Profiling, Celebrity

Profiling, Cross-Domain Authorship Attribution and Style Change

Detection”. In: Crestani, F., Braschler, M., Savoy, J., Rauber, A.,

Müller, H., Losada, D. E., Heinatz Bürki, G., Cappellato, L. and Ferro, N.

(eds.). Experimental IR Meets Multilinguality,

Multimodality, and Interaction. Springer International Publishing,

Cham (2019), pp. 402–416.

Debon 2008 Debon, C. ‘Calligrammes’ Dans Tous Ses États - Édition Critique Du Recueil de

Guillaume APOLLINAIRE, éditions Calliopées, Paris (2008).

Descartes 1959 Descartes, R. Règles pour la Direction de l’Esprit (3rd Ed.; J.

Sirven, Ed.). Vrin, Paris (1959).

Du 2019 Du, K. “A Survey On LDA

Topic Modeling In Digital Humanities”. Proceedings of the 2019 Digital Humanities Conference. Utrecht

(2019).

Décaudin 1969 Décaudin, M. Le Dossier d’alcools, Droz, Paris (1969).

Eder 2015 Eder, M. “Does Size

Matter? Authorship Attribution, Small Samples, Big Problem”.

Literary and Linguistic Computing 30(2):167–182. doi:

https://doi.org/10.1093/llc/fqt066

Eder 2017 Eder, M. “Visualization in Stylometry: Cluster Analysis Using Networks”.

Digital Scholarship in the Humanities

32(1):50–64. doi:

https://doi.org/10.1093/llc/fqv061

Eder et al. 2016 Eder, M., Rybicki, J. and

Kestemont, M. “Stylometry with R: A Package for

Computational Text Analysis”. The R

Journal 8(1):107–121.

Erlin et al. 2021 Erlin, M., Piper, A., Knox, D.,

Pentecost, S., Drouillard, M., Powell, B. and Townson, C. “Cultural Capitals: Modeling Minor European Literature”.

Journal of Cultural Analytics 6 (1).

https://doi.org/10.22148/001c.21182

Evert et al. 2017 Evert, S., Proisl, T., Jannidis,

F., Reger, I., Pielström, S., Schöch, C. and Vitt, T. “Understanding and Explaining Delta Measures for Authorship

Attribution”.

Digital Scholarship in the

Humanities 32(suppl_2):ii4–ii16. doi:

https://doi.org/10.1093/llc/fqx023

Flanders and Jannidis 2019 Flanders, J.

and Jannidis, F. The Shape of Data in Digital Humanities:

Modeling Texts and Text-Based Resources, Routledge, Taylor and

Francis Group, London; New York (2019).

Follet 1987 Follet, L. “Apollinaire Entre Vers et Prose, de ‘L’Obituaire’ à la ‘Maison des

Morts’”,

Semen 3, février 1987.

doi:

http://semen.revues.org/5523

Frontini et al. 2017 Frontini, F., Boukhaled,

M.-A. and Ganascia, J.-G. “Mining for Characterising

Patterns in Literature Using Correspondence Analysis: an Experiment on

French Novels”. Digital Humanities

Quarterly 11(2).

Genette 1972 Genette, G. Figures III, Éditions du Seuil, Paris (1972).

Gius and Jacke 2017 Gius, E. and Jacke, J.

“The Hermeneutic Profit of Annotation: On Preventing and

Fostering Disagreement in Literary Analysis”.

International Journal of Humanities and Arts Computing

11(2):233–254. doi:

https://doi.org/10.3366/ijhac.2017.0194

Gius et al. 2019 Gius, E., Reiter, N. and Willand,

M. “Foreword to the Special Issue ‘A

Shared Task for the Digital Humanities: Annotating Narrative

Levels’”.

Journal of Cultural

Analytics. doi:

https://doi.org/10.22148/16.047

Guiraud 1953 Guiraud, P. Index Des Mots d’Alcools de G. Apollinaire (Index Du Vocabulaire Du

Symbolisme. 1), Klincksieck, Paris (1953).

Hayles 2012 Hayles, N. K. How

We Think: Digital Media and Contemporary Technogenesis. University

of Chicago Press, Chicago (2012).

Heiden et al. 2010 Heiden, S., Magué, J.-P. and

Pincemin, B. “TXM : Une plateforme logicielle open-source

pour la textométrie - conception et développement”. JADT 2010. (2010), pp. 1021–1032.

Henny et al. 2018 Henny, U., Betz, K., Schlör, D.

and Hotho, A. “Alternative Gattungstheorien. Das

Prototypenmodell am Beispiel hispanoamerikanischer Romane”. Proceedings of the DHd 2018 Conference. Cologne

(2018).

Herrmann 2017 Herrmann, J.B. “In a Test Bed with Kafka. Introducing a Mixed-Method Approach

to Digital Stylistics”. Digital Humanities

Quarterly 11(4).

Herrmann and Lauer 2018 Herrmann, J.B. and

Lauer, G. “Korpusliteraturwissenschaft. Zur Konzeption und

Praxis am Beispiel eines Korpus zur literarischen Moderne”. Osnabrücker Beiträge zur Sprachtheorie (OBST)

92:127–156.

Herrmann et al. 2019 Herrmann, J.B., Woll, K.

and Dorst, A.G. “Linguistic Metaphor Identification in

German”. MIPVU in Multiple Languages.

John Benjamins, Amsterdam / Philadelphia (2019).

Herrmann et al. 2021 Herrmann, J.B., Jacobs, A.

and Piper, A. “Computational Stylistics”. In D.

Kuiken and A. Jacobs (Eds.), Handbook of Empirical Literary

Studies, pp. 451-486. Berlin: De Gruyter.

Herrmann forthcoming Herrmann, J.B. Externalizations. Data-Driven Literary Studies.

Herschberg-Pierrot 2005 Herschberg-Pierrot, A. Le Style En Mouvement. Littérature

et Art, Sup/Lettres, Belin (2005).

Herschberg-Pierrot 2006 Herschberg-Pierrot, A. “Style, Corpus et Genèse”.

Corpus 5.

Hirst and Feiguina 2007 Hirst, G. and

Feiguina, O. “Bigrams of Syntactic Labels for Authorship

Discrimination of Short Texts”.

Literary and

Linguistic Computing 22(4):405–417. doi:

https://doi.org/10.1093/llc/fqm023

Jacquot 2012 Jacquot, C. “Le

Poulpe, une Figure de la ‘Plasticité’ d’Apollinaire?”, Apollinaire 11 (2012), pp. 35–43.

Jacquot 2014 Jacquot, C. Plasticité de l’Écriture Poétique d’Apollinaire. Une Articulation du

Continu et du Discontinu. Thèse de doctorat en langue française sous

la direction de J. Dürrenmatt, Université Paris IV-Sorbonne (2014).

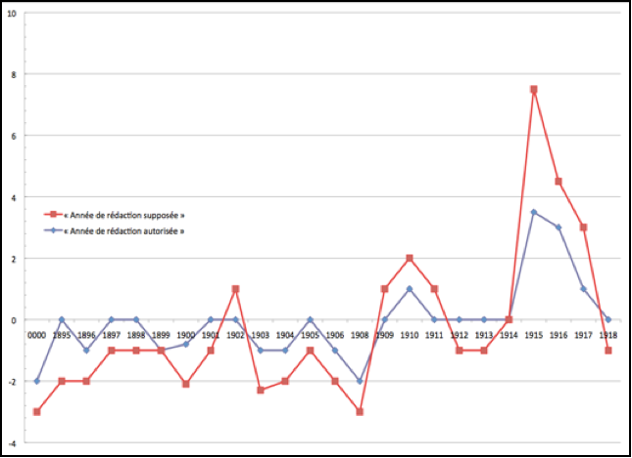

Jacquot 2017 Jacquot, C. “Corpus Poétique et Métadonnées: la Problématique de la Datation dans les

Poèmes de Guillaume Apollinaire”. Modèles et

Nombres En Poésie. Champion, Paris (2017), pp. 81–103.

Jenny 2011 Jenny, L. Le Style

En Acte. Vers Une Pragmatique Du Style, MētisPresses, Genève

(2011).

Joyce 1968 Joyce, J. A Portrait

of the Artist as a Young Man, Text, Criticism, and Notes. Edited by

Chester G. Anderson. The Viking Press, New York (1968).

Kestemont et al. 2018 Kestemont, M.,

Tschuggnall, M., Stamatatos, E., Daelemans, W., Specht, G., Stein, B. and

Potthast, M. “Overview of the Author Identification Task at

PAN-2018: Cross-Domain Authorship Attribution and Style Change

Detection”. Working Notes Papers of the CLEF

2018 Evaluation Labs. CEUR Workshop Proceedings (2018), pp.

1–25.

Kiefer 2018 Kiefer, K. “Tool

Criticism on emotional text analysis”. Proceedings of the EADH Conference. (2018).

Koolen et al. 2018 Koolen, J., van Gorp, M. and

van Ossenbruggen, J. “Lessons Learned from a Digital Tool

Criticism Workshop”. Proceedings from DH Benelux

2018. Amsterdam, The Netherlands (2018).

Kuhn 2019 Kuhn, J. “Computational Text Analysis Within the Humanities: How to Combine Working

Practices from the Contributing Fields?”.

Language Resources and Evaluation 53(4): 565–602. doi:

https://doi.org/10.1007/s10579-019-09459-3

Lafon and Muller 1984 Lafon, P. and Muller,

C. Dépouillements et Statistiques En Lexicométrie, Travaux

de linguistique quantitative, Slatkine/Champion, Genève/Paris

(1984).

Lau and Baldwin 2016 Lau, J.H. and Baldwin,

T. “The Sensitivity of Topic Coherence Evaluation to Topic

Cardinality”. Proceedings of the 2016 Conference

of the North American Chapter of the Association for Computational

Linguistics: Human Language Technologies. Association for

Computational Linguistics, San Diego, California (2016), pp. 483–487.

Martínez Cantón et al. 2017 Martínez

Cantón, C.I., Ruiz Fabo, P., González-Blanco García, E. and Poibeau, T. “Automatic enjambment detection as a new source of evidence in

Spanish versification”. Plotting Poetry : On

Mechanically-Enhanced Reading / Machiner La Poésie: Sur Les Lectures

Appareillées. Basel (2017).

McCarthy 2005 McCarthy, W. Humanities Computing, Palgrave Macmillan UK (2005).

Millson 2010 Millson, D. (ed) Experimentation and Interpretation: The Use of Experimental

Archaeology in the Study of the Past. Oxbow Books (2010).

Mitrofanova 2015 Mitrofanova, O. “Probabilistic Topic Modeling of the Russian Text Corpus on

Musicology”. In: Eismont, P. and Konstantinova, N. (eds.). Language, Music, and Computing. Springer International

Publishing, Cham (2015), pp. 69–76.

Moore 1995 Moore, C. Apollinaire en 1908, la Poétique de l'Enchantement: Une Lecture

d'Onirocritique, Paris, Minard (1995).

Noble 2018 Noble, S.U. Algorithms of Oppression: How Search Engines Reinforce Racism, New

York University Press, New York (2018).

Open Science Collaboration 2015 Open Science Collaboration. (2015). “Estimating the

reproducibility of psychological science”.

Science, 349(6251), aac4716–aac4716. doi:

https://doi.org/10.1126/science.aac4716

O’Neil 2016 O’Neil, C. Weapons

of Math Destruction: How Big Data Increases Inequality and Threatens

Democracy, 1st edition, Crown, New York (2016).

Pavlova and Fischer 2018 Pavlova, I. and

Fischer, F. “Topic Modeling 200 Years of Russian

Drama”. Proceedings of the EADH

Conference (2018).

Percillier 2017 Percillier, M. “Creating and Analyzing Literary Corpora”. Data Analytics in Digital Humanities. Springer, Cham

(2017), pp. 91–118.

Pilshchikov and Starostin 2015 Pilshchikov, I. and Starostin, A. “Reconnaissance

Automatique des Mètres des Vers Russes : Une Approche Statistique sur

Corpus”.

Langages 199(3):89–106. doi:

https://doi.org/10.3917/lang.199.0089

Pincemin 2011 Pincemin, B. “Analyse Stylistique Différentielle à Base de Marqueurs et

Textométrie”. In: Garric, N. and Maurel-Indart, H. (eds.). Vers Une Automatisation de l’analyse Textuelle. texto ! Textes

and Cultures, Volume XV - n°4 et XVI - n°1 (2011), pp. 54–61.

Piper 2018 Piper, A. Enumerations, The University of Chicago Press, Chicago

(2018).

Plecháč and Kolár 2017 Plecháč, P. and

Kolár, R. Kapitoly z Korpusové Versologie,

Akropolis, Prague (2017).

Popper 2002 Popper, K.R. Conjectures and Refutations: The Growth of Scientific Knowledge,

3rd ed. revised, Routledge and Kegan Paul, London (2002).

Queneau 1961 Queneau, R. Petite Cosmogonie Portative, Gallimard, Paris (1961).

Rastier 2002 Rastier, F. “Enjeux épistémologiques de la linguistique de corpus”. In: G, W.

(ed.). Deuxièmes Journées de La Linguistique de

Corpus. Presses Universitaires de Rennes, Lorient, France (2002),

pp. 31–46.

Rebora et al. 2019 Rebora, S., Herrmann, J.B.,

Lauer, G. and Salgaro, M. “Robert Musil, a war journal, and

stylometry: Tackling the issue of short texts in authorship

attribution”.

Digital Scholarship in the

Humanities 34(3):582–605. doi:

https://doi.org/10.1093/llc/fqy055

Rockwell and Sinclair 2016 Rockwell, G.

and Sinclair, S. Hermeneutica: Computer-Assisted

Interpretation in the Humanities, MIT Press (2016).

Schöch 2017 Schöch, C. “Topic

Modeling Genre: An Exploration of French Classical and Enlightenment

Drama”. Digital Humanities Quarterly

11(2).

Simmler et al. 2019 Simmler, S., Vitt, T. and

Pielström, S. “Topic Modeling with Interactive

Visualizations in a GUI Tool”. Proceedings of

the 2019 Digital Humanities Conference. Utrecht (2019).

Simpson 2004 Simpson, P. Stylistics. A Resource Book for Students, Routledge English

language introductions, Routledge, London [u.a.] (2004).

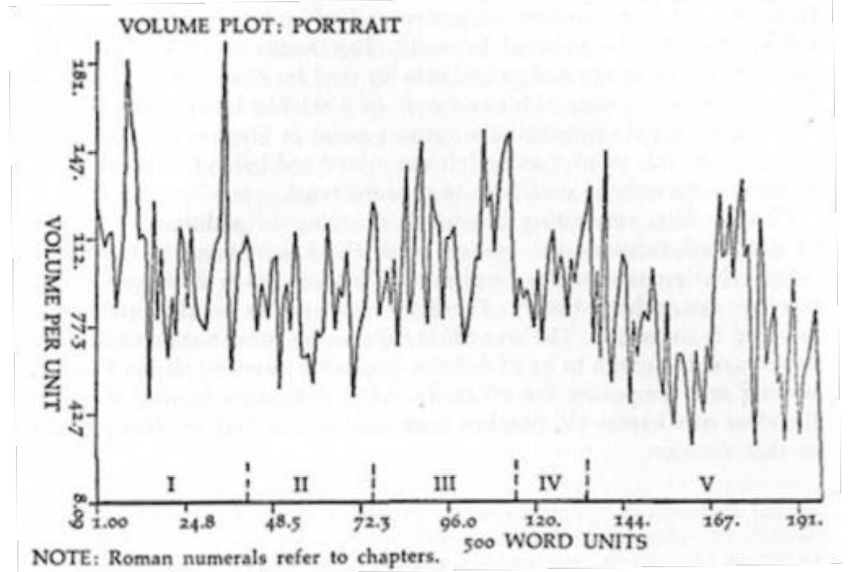

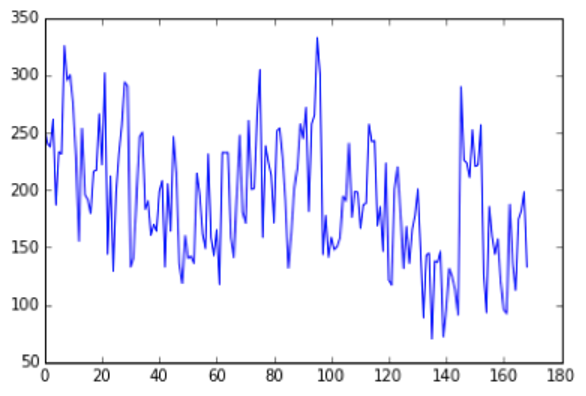

Smith 1973 Smith, J.B. “Image

and Imagery in Joyce’s Portrait: A Computer-Assisted Analysis”.

Directions in Literary Criticism: Contemporary

Approaches to Literature. The Pennsylvania State University Press,

University Park, PA (1973), pp. 220–227.

Smith 1978 Smith, J.B. “Computer Criticism”. Style

XII(4):326–356.

Smith 1980 Smith, J.B. Imagery

and the Mind of Stephen Dedalus: A Computer-Assisted Study of Joyce’s A Portrait of the Artist as a Young Man,

Bucknell University Press, Lewisburg, PA (1980).

Smith 1984 Smith, J.B. “A New

Environment For Literary Analysis”. Perspectives

in Computing 4(2/3):20–31.

Stamatatos et al. 2014 Stamatatos, E.,

Daelemans, W., Verhoeven, B., Potthast, M., Stein, B., Juola, P., Sanchez-Perez,

M.A. and Barrón-Cedeño, A. “Overview of the Author

Identification Task at PAN 2014”. Working Notes

Papers of the CLEF 2014 Evaluation Labs. CEUR Workshop Proceedings,

877-897 (2014).

Steen et al. 2010 Steen, G.J., Dorst, A.G.,

Herrmann, J.B., Kaal, A.A., Krennmayr, T. and Pasma, T. A Method for Linguistic Metaphor Identification: From MIP to MIPVU,

John Benjamins, Amsterdam and Philadelphia (2010).

Steyvers and Griffiths 2007 Steyvers,

M. and Griffiths, T. “Probabilistic Topic Models”.

Latent Semantic Analysis: A Road to Meaning.

Lawrence Erlbaum (2007), pp. 424–440.

Suppes 1968 Suppes, P. “The

Desirability of Formalization in Science”. The

Journal of Philosophy, 65(20), 651–664 (1968).

Terras et al. 2013 Terras, M., Vanhoutte, E. and

Nyhan, J. Defining Digital Humanities: A Reader,

Routledge, London/New York (2013).

Traub and van Ossenbruggen 2015 Traub, M.C. and van Ossenbruggen, J. Workshop on Tool

Criticism in the Digital Humanities - Report, CWI Techreport,

Amsterdam (2015).

Underwood 2019 Underwood, T. Distant Horizons: Digital Evidence and Literary

Change, First edition, University of Chicago Press, Chicago

(2019).

Unsworth 2000 Unsworth, J. “Scholarly Primitives: What Methods do Humanities Researchers Have in

Common, and How Might our Tools Reflect This”. Symposium on Humanities Computing: Formal Methods, Experimental

Practice. King’s College, London. (2000).

Weizenbaum 1984 Weizenbaum, J. Computer Power and Human Reason: From Judgment to

Calculation. Penguin, Harmondsworth. (1984).

Winko 2015 Winko, S. “Zur

Plausibilität als Beurteilungskriterium literaturwissenschaftlicher

Interpretationen”.

Theorien, Methoden und

Praktiken des Interpretierens. De Gruyter, Berlin, Boston (2015),

pp. 483–511. doi:

https://doi.org/10.1515/9783110353983.483

van Cranenburgh 2012 van Cranenburgh, A.

“Literary Authorship Attribution With Phrase-Structure

Fragments”. Proceedings of the NAACL-HLT 2012

Workshop on Computational Linguistics for Literature. Association

for Computational Linguistics, Montréal, Canada (2012), pp. 59–63.

van Es et al. 2018 van Es, K., Wieringa, M. and

Schäfer, M.T. “Tool Criticism: From Digital Methods to

Digital Methodology”.

Proceedings of the 2nd

International Conference on Web Studies. ACM, New York, NY, USA

(2018), pp. 24–27. doi:

http://doi.acm.org/10.1145/3240431.3240436