Works Cited

ADHO 2019 Alliance of Digital Humanities Organizations

2019. Available from:

http://www.adho.org/. (Accessed November 21, 2019).

Arnold and Tilton 2019 Arnold, T. and Tilton, L.

“Distant viewing: analyzing large visual corpora,”

Digital Scholarship in the Humanities, fqz013, Oxford

University Press, Oxford, 0:0 (2019).

Arnold et al. 2013 Arnold, M., Bell, P. and Ommer, B.

“Automated Learning of Self-Similarity and Informative

Structures in Architecture,”

Scientific Computing & Cultural Heritage

(2013).

Bar 2009 Bar, M. “The Proactive

Brain: Memory for Predictions,”

Philosophical Transactions of the Royal Society, The

Royal Society Publishing, London, 364 (2009): 1235-1243.

Bautista et al. 2016 Bautista, M. A., Sanakoyeu, A.,

Tikhoncheva, E. and Ommer, B. “CliqueCNN: Deep unsupervised

exemplar learning.” In Advances in Neural Information

Processing Systems, NIPS, Barcelona, December 2016, 3846-3854.

Benjamin 1969 Benjamin, W. “The

Artwork in the Age of Mechanical Reproduction.” In Illuminations: Essays and Reflections, ed. Hannah Arendt, trans. Harry

Zohn, New York, Schocken Books, (1969): 52-78.

Bishop 2018 Bishop, C. “Against

Digital Art History,”

International Journal for Digital Art History 3 (2018):

123-133.

Bonfiglioli and Nanni 2015 Bonfiglioli, R. and

Nanni, F. “From close to distant and back: how to read with the

help of machines.” In International Conference on the

History and Philosophy of Computing, Pisa, October 2015, 87-100.

Chen 1976 Chen, P. P.-S. “The

Entity-Relationship Model – Toward a Unified View of Data”, ACM Transactions on Database Systems, Association for

Computing Machinery, New York, 1:1 (March 1976): 9-36.

Codd 1970 Codd, E.F. “A relational

model of data for large shared data banks”, Communications of the ACM, Association for Computing Machinery, New

York, 13:6 (1970): 377-387.

Cornell University Library 2016a Cornell University

Library 2016, “Mnemosyne. Meandering through Aby Warburg’s

Atlas,” Cornell University. Available from:

https://warburg.library.cornell.edu/about. (Accessed November 18,

2019).

Cornell University Library Home 2016b Cornell

University Library 2016, “Mnemosyne. Meandering through Aby

Warburg’s Atlas,” Cornell University. Available from:

https://warburg.library.cornell.edu/. (Accessed November 20, 2019).

Crowley and Zisserman 2016 Crowley, E.J. and

Zisserman, A. “The art of detection.” In Proceedings of the European Conference on Computer Vision,

ECCV, Amsterdam, October 2016, 721-737.

Doersch 2016 Doersch, C. “Tutorial on variational autoencoders” arXiv preprint

arXiv:1606.05908 (2016).

Drucker 2013 Drucker, J. “Is

There a ‘Digital’ Art History?,”

Visual Resources, 29 (2013): 5-13.

Eigenstetter et al. 2014 Eigenstetter, A.,

Takami, M. and Ommer, B. “Randomized Max-Margin Compositions for

Visual Recognition.” In Proceedings of the Conference

on Computer Vision and Pattern Recognition, CVPR, Columbus, Ohio, June

2014, 3590-3597.

Elgammal et al. 2018a Elgammal, A., Kang, Y. and

Leeuw, M.D. “Picasso, Matisse, or a Fake? Automated Analysis of

Drawings at the Stroke Level for Attribution and Authentication.” In Thirty-Second AAAI Conference on Artificial Intelligence,

New Orleans, February 2018.

Elgammal et al. 2018b Elgammal, A., Liu, B., Kim,

D., Elhoseiny, M. and Mazzone, M. “The shape of art history in

the eyes of the machine.” In Thirty-Second AAAI

Conference on Artificial Intelligence, New Orleans, February 2018.

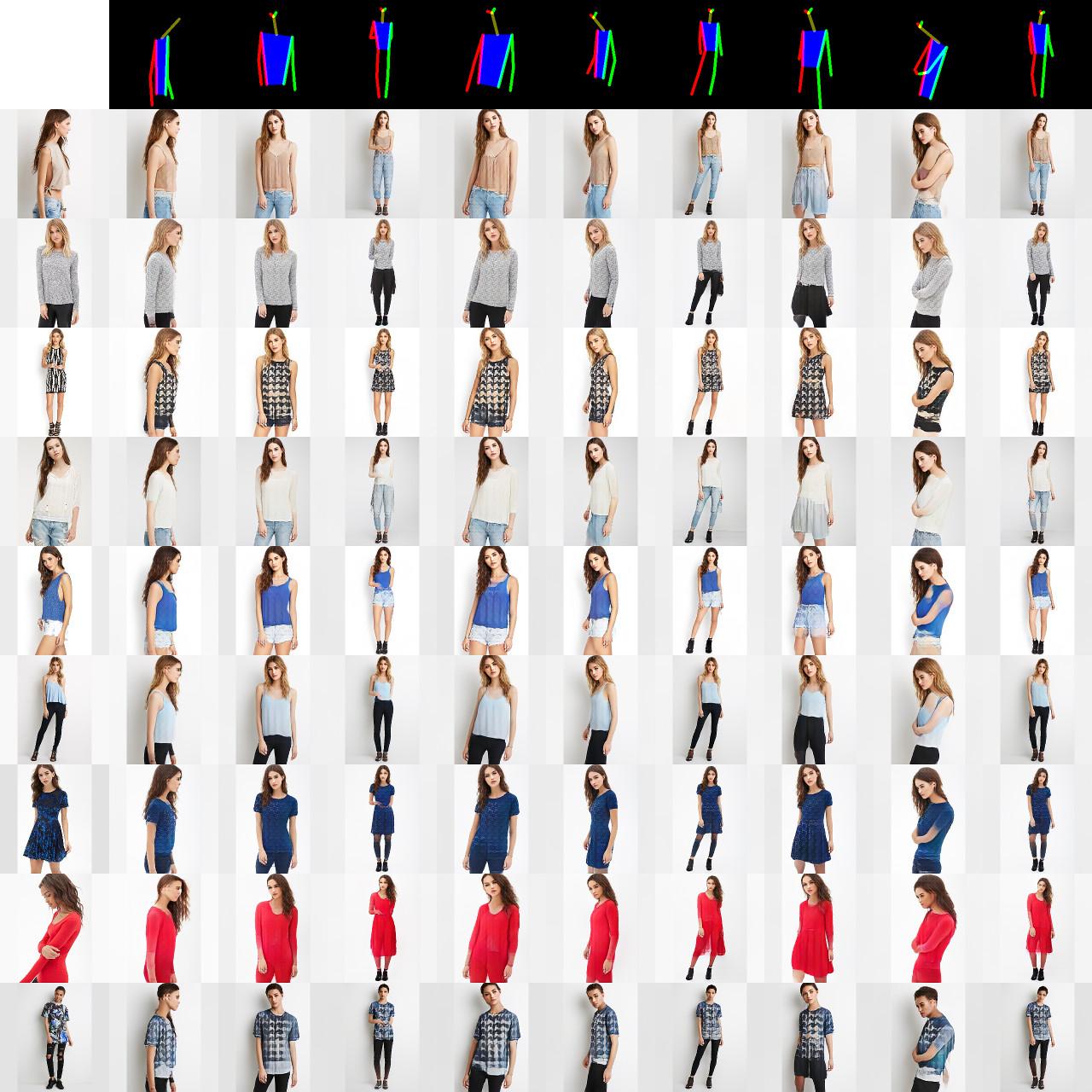

Esser et al. 2018a Esser, P., Haux, J., Milbich, T.

and Ommer, B. “Towards Learning a Realistic Rendering of Human

Behavior.” In Proceedings of the European Conference

on Computer Vision, ECCV, workshops, Munich, September 2018,

409-425.

Esser et al. VU-Net 2018b Esser, P., Sutter, E. and

Ommer, B. “A Variational U-Net for Conditional Appearance and

Shape Generation.” In Proceedings of the IEEE

Conference on Computer Vision and Pattern Recognition, CVPR, Salt Lake

City, Utah, June 2018, 8857-8866.

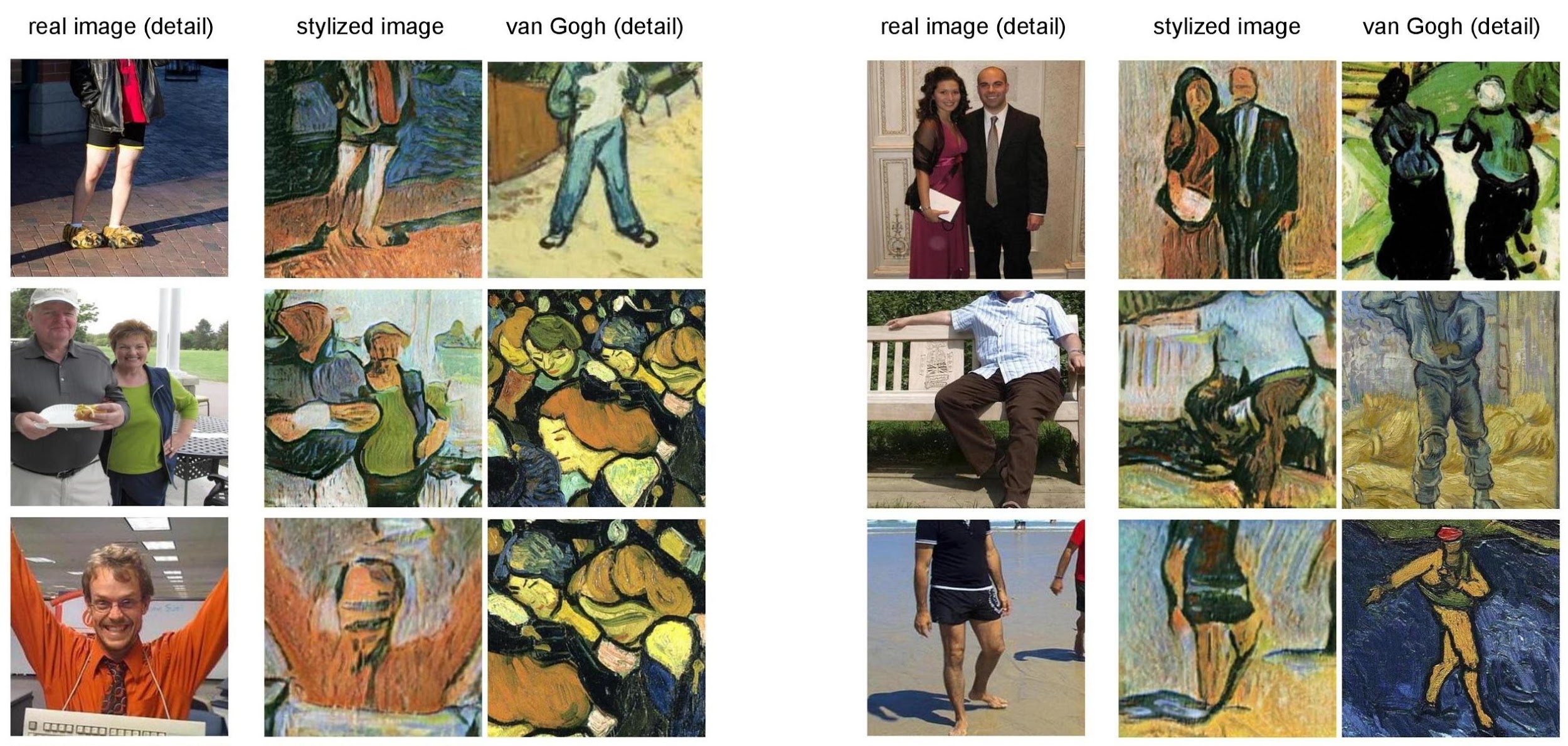

Gatys et al. 2015 Gatys, L.A., Ecker, A.S. and Bethge,

M. “A neural algorithm of artistic style” arXiv preprint

arXiv:1508.06576 (2015).

Gatys et al. 2016 Gatys, L.A., Ecker, A.S. and Bethge,

M. “Image style transfer using convolutional neural

networks.” In Proceedings of the IEEE Conference on

Computer Vision and Pattern Recognition, CVPR, Las Vegas, Nevada,

June/July 2016, 2414-2423.

Girshick 2015 Girshick, R. “Fast R-CNN.” In Proceedings of the IEEE International

Conference on Computer Vision, ICCV, Las Condes, Chile, December 2015,

1440-1448.

Gogolla 1994 Gogolla, M. “An

extended entity-relationship model: fundamentals and pragmatics.” Vol. 767.

Springer, Heidelberg (1994).

Goodfellow et al. 2014 Goodfellow, I.,

Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A. and

Bengio, Y. “Generative adversarial nets.” In Advances in Neural Information Processing Systems, NIPS,

Montreal, December 2014, 2672-2680.

Goodfellow et al. 2016 Goodfellow, I., Bengio, Y. and

Courville, A. Deep Learning. MIT Press, Cambridge,

London (2016).

Hatt/Klonk 2006 Hatt, M. and Klonk, C. Art History. A Critical Introduction to its Methods.

Manchester University Press, Manchester (2006).

Hertzmann 2019 Hertzmann, A. “Aesthetics of Neural Network Art” arXiv preprint arXiv:1903.05696 (March

2019).

Hristova 2016 Hristova, S. “Images as data: cultural analytics and Aby Warburg’s Mnemosyne,”

International Journal for Digital Art History, 2

(2016).

Hu et al. 2018 Hu, H., Gu, J., Zhang, Z., Dai, J. and Wei, Y.

“Relation networks for object detection.” In Proceedings of the IEEE Conference on Computer Vision and Pattern

Recognition, CVPR, Salt Lake City, June 2018, 3588-3597.

Huang and Belongie 2017 Huang, X. and Belongie, S.J.

“Arbitrary Style Transfer in Real-Time with Adaptive Instance

Normalization.” In Proceedings of the International

Conference on Computer Vision, ICCV, Venice, October 2017,

1510-1519.

Hvattum and Hermansen 2004 Hvattum, M. and Hermansen,

C. Tracing Modernity: Manifestations of the Modern in

Architecture and the City. Routledge, New York, London (2004).

Impett and Süsstrunk 2016 Impett, L., and Süsstrunk,

S. “Pose and Pathosformel in Aby Warburg’s Bilderatlas.”

In Proceedings of the European Conference on Computer

Vision, ECCV, Amsterdam, October 2016, 888-902.

Isola et al. 2018 Isola, P., Zhu, J.Y., Zhou, T. and

Efros, A. A. “Image-to-Image Translation with Conditional

Adversarial Networks.” In Proceedings of the IEEE

Conference on Computer Vision and Pattern Recognition, CVPR, Salt Lake

City, Utah, June 2018, 1125-1134.

Johnson 2012 Johnson, C. Memory,

Metaphor, and Aby Warburg’s Atlas of Images. Cornell University Press,

Ithaca (2012).

Johnson et al. 2016 Johnson, J., Alahi, A. and

Fei-Fei, L. “Perceptual losses for real-time style transfer and

super-resolution.” In Proceedings of the European

Conference on Computer Vision, ECCV, Amsterdam, October 2016,

694-711.

Kalkstein 2019 Kalkstein, M. “Aby Warburg's Mnemosyne Atlas: On Photography, Archives, and the Afterlife of

Images,” Rutgers Art Review: The Journal of Graduate

Research in Art History, Rutgers School of Arts and Sciences, New

Brunswick, 35 (2019): 50-73.

Karayev et al. 2013 Karayev, S., Trentacoste, M.,

Han, H., Agarwala, A., Darrell, T., Hertzmann, A. and Winnemoeller, H. “Recognizing image style” arXiv preprint arXiv:1311.3715

(2013).

Kienle 2017 Kienle, M. “Digital

Art History: Beyond the Digitized Slide Library. An Interview with Johanna Drucker

and Miriam Posner,”

Artl@s Bulletin, 6.3:9 (2017).

Kim et al. 2019 Kim, S., Min, D., Jeong, S., Kim, S.,

Jeon, S. and Sohn, K. “Semantic Attribute Matching

Networks.” In Proceedings of the IEEE Conference on

Computer Vision and Pattern Recognition, CVPR, Long Beach, June 2019,

12339-12348.

Kotovenko et al. 2019a Kotovenko, D., Sanakoyeu,

A., Lang, S. and Ommer, B. “Content and style disentanglement for

artistic style transfer.” In Proceedings of the IEEE

International Conference on Computer Vision, ICCV, Seoul, Korea,

October/November 2019, 4422-4431.

Kotovenko et al. 2019b Kotovenko, D., Sanakoyeu,

A., Lang, S. and Ommer, B. “A Content Transformation Block for

Image Style Transfer.” In Proceedings of the IEEE

Conference on Computer Vision and Pattern Recognition, CVPR, Long Beach,

California, June 2019, 10032-10041.

Krizhevsky et al. 2012 Krizhevsky, A., Sutskever,

I., and Hinton, G.E. “ImageNet classification with deep

convolutional neural networks.” In Advances in neural

information processing systems, NIPS, Lake Tahoe, December 2012,

1097-1105.

Krogh 2008 Krogh, A. “What are

artificial neural networks?”

Nature Biotechnology, Nature Publishing Group, London,

26 (February 2008): 195-197.

Lang and Ommer 2018a Lang, S. and Ommer, B. “Attesting Similarity: Supporting the Organization and Study of Art

Image Collections with Computer Vision,”

Digital Scholarship in the Humanities, Oxford University

Press, Oxford, 33:4 (2018): 845-856.

Lang and Ommer 2018b Lang, S. and Ommer, B. “Reconstructing Histories: Analyzing Exhibition Photographs with

Computational Methods,”

Arts, Computational Aesthetics, 7:64 (2018).

Lang and Ommer 2018c Lang, S. and Ommer, B. “Reflecting on How Artworks Are Processed and Analyzed by Computer

Vision.” In Proceedings of the European Conference on

Computer Vision, ECCV, workshops, Munich, September 2018, 647-652.

LeCun et al. 2015 LeCun, Y., Bengio, Y. and Hinton, G.

“Deep Learning,”

Nature, Nature Publishing Group, London, 521 (May 2015):

436-444.

Li et al. 2012 Li, J., Yao, L., Hendriks, E. and Wang,

J.Z. “Rhythmic brushstrokes distinguish van Gogh from his

contemporaries: findings via automated brushstroke extraction.” In IEEE Transactions on Pattern Analysis and Machine

Intelligence, 34.6 (2012), 1159-1176.

Lorenz et al. 2019 Lorenz, D., Bereska, L., Milbich,

T., and Ommer, B. “Unsupervised Part-Based Disentangling of

Object Shape and Appearance.” In Proceedings of the

IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Long

Beach, June 2019, 10955-10964.

Manovich 2012 Manovich, L. “How

to compare one million images?”

Understanding Digital Humanities, Palgrave Macmillan,

London (2012): 249-278.

Monroy et al. 2011 Monroy, A., Eigenstetter, A. and

Ommer, B. “Beyond Straight Lines – Object Detection Using

Curvature.” In Proceedings of the International

Conference on Image Processing, Brussels, September 2011, 3561-3564.

Postel 2017 Postel, J.P. Der Fall

Arnolfini. Auf Spurensuche in einem Gemälde von Jan van Eyck. Verlag

Freies Geistesleben, Stuttgart (2017).

Redmon et al. 2016 Redmon, J., Divvala, S., Girshick,

R. and Farhadi, A. “You only look once: Unified, real-time object

detection.” In Proceedings of the IEEE conference on

computer vision and pattern recognition, CVPR, Las Vegas, June/July 2016,

779-788.

Ren et al. 2015 Ren, S., He, K., Girshick, R. and Sun, J.

“Faster R-CNN: Towards real-time object detection with region

proposal networks.” In Advances in Neural Information

Processing Systems, NIPS, Montreal, December 2015, 91-99.

Saleh and Elgammal 2015 Saleh, B. and Elgammal, A.

“Large-scale Classification of Fine-Art Paintings: Learning

the Right Metric on the Right Feature,”

International Journal for Digital Art History, 2

(2015).

Saleh et al. 2016 Saleh, B., Abe, K., Arora, R.S. and

Elgammal, A. “Toward automated discovery of artistic

influence,”

Multimedia Tools and Applications, Springer, Heidelberg,

75:7 (2016): 3565-3591.

Sanakoyeu et al. 2018 Sanakoyeu, A., Kotovenko, D.,

Lang, S. and Ommer, B. “A Style-Aware Content Loss for Real-time

HD Style Transfer.” In Proceedings of the European

Conference on Computer Vision, ECCV, Munich, September 2018,

698-714.

Schlecht et al. 2011 Schlecht, J., Carqué, B. and

Ommer, B. “Detecting Gestures in Medieval Images.” In

Proceedings of the International Conference on Image

Processing, ICIP, Brussels, September 2011, 1285-1288.

Seguin et al. 2016 Seguin, B., Striolo, C. and Kaplan,

F. “Visual link retrieval in a database of paintings.” In

Proceedings of the European Conference on Computer

Vision, Workshop, ECCV, Amsterdam, October 2016, 753-767.

Shen et al. 2019 Shen, X., Efros, A. A. and Aubry, M.

“Discovering Visual Patterns in Art Collections with

Spatially-consistent Feature Learning,” arXiv preprint arXiv:1903.02678

(March 2019).

Simonyan and Zisserman 2015 Simonyan, K. and

Zisserman, A. “Very deep convolutional networks for large-scale

image recognition.” In International Conference on

Learning Representations, ICLR, San Diego, May 2015, 1-14.

Spratt and Elgammal 2014 Spratt, E. L. and Elgammal,

A. “The Digital Humanities Unveiled: Perceptions Held by Art

Historians and Computer Scientists about Computer Vision Technology” arXiv

preprint arXiv:1411.6714 (2014).

Stork and Johnson 2006 Stork, D. G., and Johnson, M. K.

“Estimating the location of illuminants in realist master

paintings: Computer image analysis addresses a debate in art history of the

Baroque.” In International Conference on Pattern

Recognition, ICPR, Hong Kong, August 2006, 255-258.

Takami et al. 2014 Takami, M., Bell, P. and Ommer, B.

“An Approach to Large Scale Interactive Retrieval of Cultural

Heritage.” In Eurographics Workshop on Graphics and

Cultural Heritage, Darmstadt, October 2014, 87-95.

Teorey et al. 1986 Teorey, T. J., Dongqing, Y., and

Fry, J. P. “A logical design methodology for relational databases

using the extended entity-relationship model,” ACM

Computing Surveys (CSUR), Association for Computing Machinery, New York,

18:2 (1986): 197-222.

The Next Rembrandt 2016 The Next Rembrandt, ING, Microsoft, TU Delft, Mauritshuis, The Rembrandt

House Museum. Available from:

https://www.nextrembrandt.com/. (Accessed November 26, 2019).

Ufer et al. 2019 Ufer, N., Lui, K.T., Schwarz, K.,

Warkentin, P. and Ommer, B. “Weakly Supervised Learning of Dense

Semantic Correspondences and Segmentation.” In German

Conference on Pattern Recognition, GCPR, Dortmund, September 2019,

456-470.

Wang et al. 2017 Wang, F., Jiang, M., Qian, C., Yang,

S., Li, C., Zhang, H., Wang, X. and Tang, X. “Residual attention

network for image classification.” In Proceedings of

the IEEE Conference on Computer Vision and Pattern Recognition, CVPR,

Honolulu, July 2017, 3156-3164.

Warburg and Rampley 2009 Warburg, A. and Rampley, M.

“The absorption of the expressive values of the past,”

Art in Translation, Taylor & Francis,

London, 1:2 (2009): 273-283.

Warnke 2000 Warnke, M. “Aby

Warburg. Der Bilderatlas Mnemosyne.” In H. Bredekamp, M. Diers, K.W.

Forster, N. Mann, S. Settis, and M. Warnke (eds), Aby

Warburg. Gesammelte Schriften, Vol. 2,1, Berlin (2000).

West 2011 West, M. “Developing high

quality data models.” Elsevier, Amsterdam (2011).

Xu et al. 2015 Xu, K. et al. “Show,

attend and tell: Neural image caption generation with visual attention.” In

International conference on machine learning, ICML,

Lille, July 2015, 2048-2057.

Yarlagadda et al. 2013 Yarlagadda, P., Monroy, A.,

Carqué, B. and Ommer, B. “Towards a Computer-Based Understanding

of Medieval Images.” In H. G. Bock, W. Jaeger, M. J. Winckler (eds), Scientific Computing and Cultural Heritage, Springer,

Heidelberg (2013): 89-97.

Zhou et al. 2018 Zhou, B., Lapedriza, A., Khosla, A.,

Oliva, A., and Torralba, A. “Places: A 10 million image database

for scene recognition,”

IEEE Transactions on Pattern Analysis and Machine

Intelligence, PAMI, 40:6 (2018): 1452-1464.