Volume 17 Number 2

Nonsense Code: A Nonmaterial Performance

Abstract

Critical Code Studies often relies on the textual representation of code in order to derive extra-textual significance, with less focus on how code performs and in what contexts. In this paper we analyze three case studies in which a literal reading of each program’s code is effectively nonsense. In their performance, however, the programs generate meaning. To discern this meaning, we use the framework of nonmaterial performance (NMP), which is based on four tenets: code abstracts, code performs, code acts within a network, and code is vibrant. We begin with what is to our knowledge the oldest example of nonsense code: a program (now lost) from the 1950s that caused a Univac 1 computer to hum “Happy Birthday”. Second, we critique Firestarter, a processor stress test from the Technical University of Dresden. Finally, we analyze one of the family of processor power side-channel attacks known collectively as Platypus. In each case, the text of the code is a wholly unreliable guide to its extra-textual significance. This paper builds on work in Critical Code Studies by bringing in methodologies from actor-network theory and political science, examining code from a performance-studies perspective and with expertise from computer science. Code can certainly be read as literature, but ultimately it is text written to be performed. Imagining and observing the performance forces the critic to engage with the code in its own network. The three examples we have chosen to critique here are outliers: very little code in the world is purposed to manipulate the physical machine. Nonsense shows us the opportunity that nonmaterial performance creates: to decenter text from its privileged position and to recenter code as a performance.

1. Happy Birthday, Nonsense, and Nonmaterial Performance

2. Nonmaterial Performance

Code Abstracts

Code Performs

3. Nonsense Code

4. Firestarter

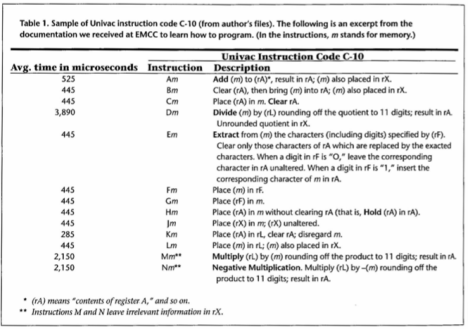

- Author: Daniel Hackenberg

- Citation: D. Hackenberg, R. Oldenburg, D. Molka and R. Schöne, “Introducing Firestarter: A processor stress test utility,” 2013 International Green Computing Conference Proceedings, Arlington, VA, USA, 2013, pp. 1-9, doi:10.1109/IGCC.2013.6604507.

- Version: 1.7.4 (1.0 released in 2013, 2.0 released in 2021)

- Source: https://github.com/tud-zih-energy/Firestarter

- Invocation: ./Firestarter --timeout=60 --report

- Technical Description:

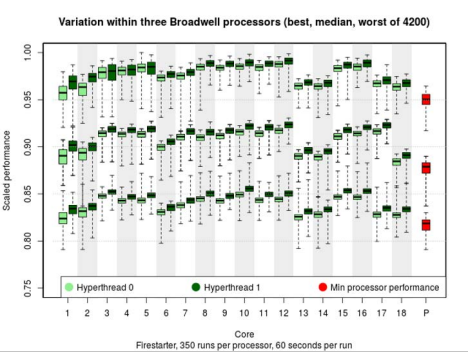

Firestarter is a processor stress test, essentially a program that makes the

processor work as hard as possible in order to determine how the processor will

behave under high load, for example, testing cooling systems at maximum power

[Hackenberg et al. 2013]. Prior to the creation of Firestarter, best

practice was to use numerically intensive codes such as Prime95 or LINPACK.

These codes, however, were written to solve mathematical problems, and only

incidentally required a large amount of power. Firestarter is designed to

consume maximum power — and nothing else. As such it can reach both higher and

more consistent levels of power consumption, thereby producing more reliable

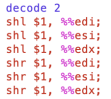

results. Firestarter’s code is a carefully constructed mass of assembly

language instructions that work nearly all of the components of the processor

simultaneously and as hard as possible — without calculating anything useful at

all. Firestarter thus meets our definition of nonsense: its purpose is to

change the state of the physical processor using the side effects of the

available assembly language instructions.
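The idea of code whose only product is its side effect can be sketched in a few lines. The following is a toy illustration, not Firestarter's method: Firestarter uses hand-tuned assembly to load many processor components at once, whereas this Python loop merely keeps one core busy with floating-point arithmetic whose results are thrown away. The function name `burn` and the half-second duration are assumptions made for the example.

```python
import math
import time


def burn(seconds: float) -> int:
    """Spin on floating-point work for roughly `seconds`, discarding every result.

    Hypothetical illustration only: Firestarter itself is hand-tuned assembly
    and drives far more of the processor than this single-threaded loop can.
    """
    deadline = time.perf_counter() + seconds
    iterations = 0
    x = 1.0001
    while time.perf_counter() < deadline:
        # The arithmetic keeps the core busy; the value of x is never used.
        # The modulo keeps x bounded so the loop can run indefinitely.
        x = math.sqrt(x * x + 1.0) % 3.0 + 1.0
        iterations += 1
    return iterations


if __name__ == "__main__":
    count = burn(0.5)
    print(f"performed {count} useless iterations")
```

Read as text, the loop computes nothing worth keeping. Its only effect is on the machine: for the duration of the call, one core draws more power and produces more heat than it would at idle.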

Critique

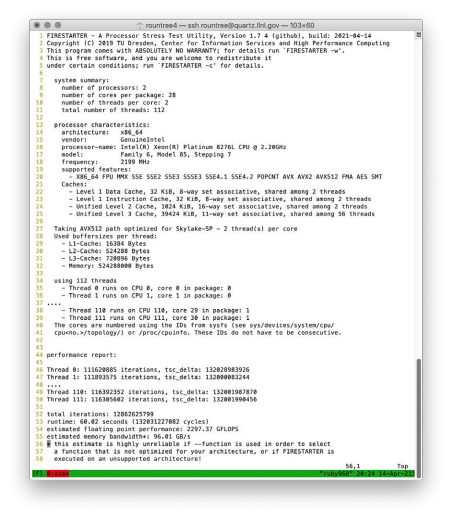

| Number of cores | Units of work completed in 60 seconds |
| --- | --- |
| 1 | 1.0 |
| 14 | 9.9 |
| 28 | 13.3 |
| 56 | 27.0 |
| 112 | 24.7 |
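One way to read the table is to divide each row's units of work by its core count. The sketch below does only that arithmetic on the figures as given; the name `work_per_core` is introduced for the example, and no claim is made about why the numbers fall as they do.

```python
# Rows copied from the table: (cores, units of work completed in 60 seconds).
rows = [(1, 1.0), (14, 9.9), (28, 13.3), (56, 27.0), (112, 24.7)]


def work_per_core(rows):
    """Return (cores, total work, work per core) for each table row."""
    return [(cores, work, work / cores) for cores, work in rows]


for cores, work, per_core in work_per_core(rows):
    print(f"{cores:>3} cores: {work:>5.1f} units total, {per_core:.3f} per core")
```

Total work does not grow in proportion to the number of cores, and the 112-core run completes less total work than the 56-core run (24.7 versus 27.0 units).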

5. Platypus

- Author: Moritz Lipp et al.

- Citation: “PLATYPUS: Software-based Power Side-Channel Attacks on x86”, 2021 IEEE Symposium on Security and Privacy.

- URL: https://platypusattack.com

- Source: Proof-of-concept code has not been released.

Safecrackers and Side-Channel Attacks

To understand side-channel attacks, consider state-of-the-art safecracking in 1950: Combination locks are simple. Discs with slots are aligned until a lever falls into the slots. Then the lock opens. The moving discs and the falling lever make a noise. Legendary cracksmen filed their fingertips to the quick and felt the movement. So locks were refined. Then cracksmen used stethoscopes to listen to the movement. Locks were then made too smooth for that device.

But the war brought on the development of electronic detection of supersonic sound. In any radio store you can buy the apparatus. Cracksmen use an aerial the size of a knitting needle some six inches high set in a base the size of a biscuit. Push it against the safe dial. The sound is picked up, amplified in a box about six by four by two inches in size and recorded on a dial like the ammeter in a car.

What happens inside the combination lock is read as easily as an electro cardiograph by a physician. Which means it is not easy, but it can be done by an expert. [Winget 1950]

SGX and the Cloud

RAPL

APIC

The Attack

Critique

Code Acts within a Network

6. Conclusion

- Code abstracts: Languages allow programmers to work with a simpler world than physical reality.

- Code performs: Unlike mathematics, code has an embodied physicality of heat, energy, and resonance.

- Code acts within a network: The network in which the code performs is not fixed, and meaning accrues in the juxtapositions of its actants. If one changes the network, the meaning changes.

- Code is vibrant: Code cannot be nailed to a single meaning. Meaning is generated within a particular relationship of a particular set of actants, including text, machine, and people. Vibrancy describes what happens as the actants and their relationships change.