Volume 15 Number 1

From the Presupposition of Doom to the Manifestation of Code: Using Emulated Citation in the Study of Games and Cultural Software

Abstract

For the field of game history to mature, and for game studies more broadly to function in a scholarly manner in the coming decades, one necessity will be improvement of game citation practices. Current practices have some obvious problems, such as a lack of standardization even within the same journal or book series. But a more pressing problem is disguised by the field’s youth: Common citation practices depend on the play experiences and cultural knowledge of a generation of game studies scholars and readers who are largely old enough to have lived through the eras they are discussing. More sustainable and precise alternatives cannot fall back on the tools available for fixed media — such as the direct quotations and page numbers used for books or the screenshots (of images that appear to all viewers) and timecode used for video. Instead, this essay imagines an alternative approach, working in the digital humanities traditions of speculative collections and tool-based argumentation. In the speculative future we present, there are scholarly collections of software, as well as tools available for citing software states and integrating these citations into scholarly arguments. A working prototype of such a tool is presented, together with examples of scholarly use and the results of an evaluation of the concept with game scholars.

1 The Changing Archive

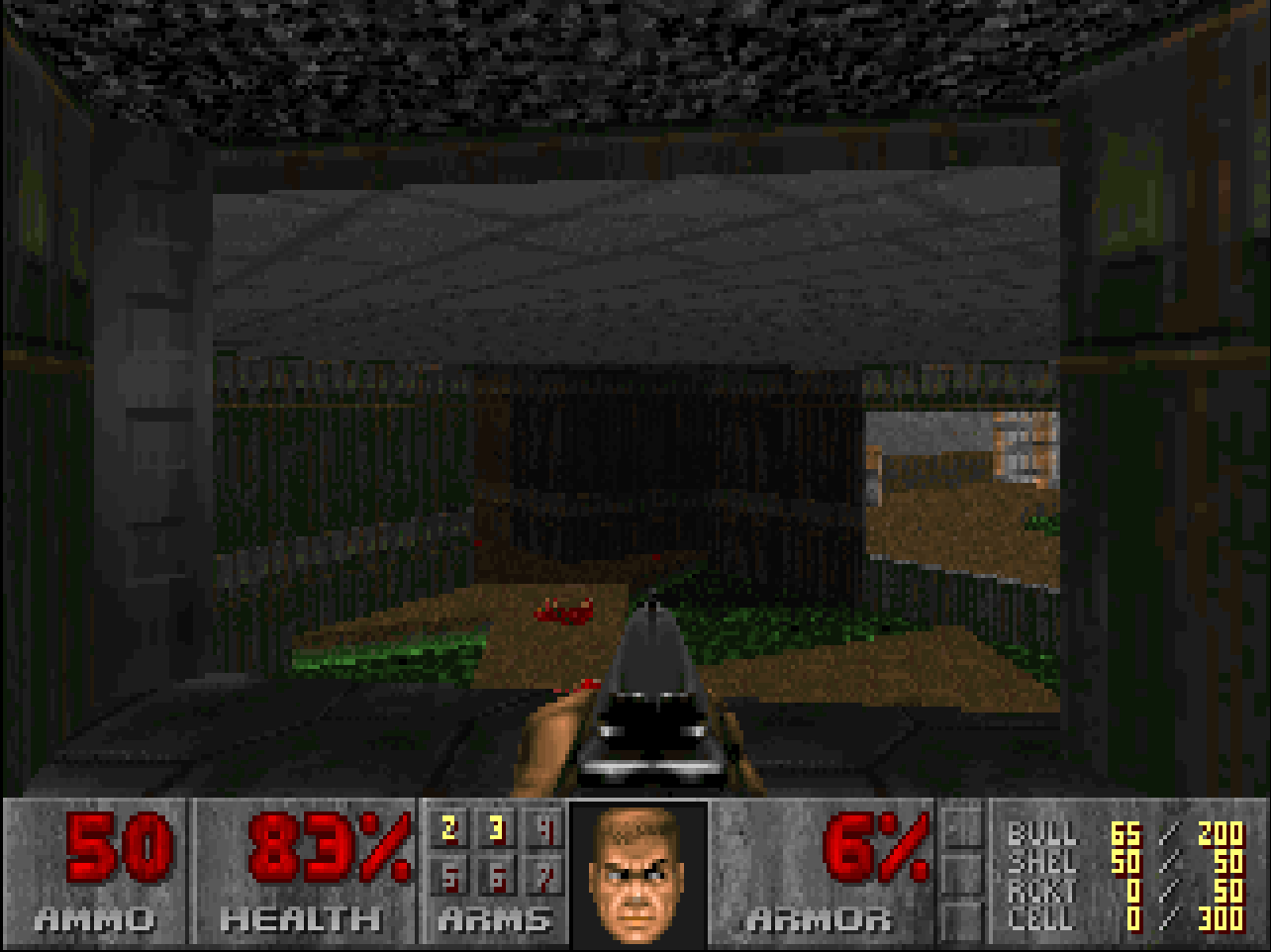

The color palette of browns and grays shouts a level of realism that Wolfenstein 3D didn’t get anywhere near. A barrel positioned temptingly in the center of the screen just aches to be shot at and delivers a meaty crunching explosion that scatters debris across the screen. . . . There are a number of linked secrets, establishing that opening up new areas involves not just finding and triggering buttons and trip wires but triggering things in sequence. In this case, we have a different-colored wall panel, dropping down into a passage that takes us to the lake of waste with the superarmor, then a trip wire in the final room that lowers the Imp platform, announcing dynamic vertical-level adjustment and opening up a little area with a shotgun and shells. . . . In the space of a few short and small secrets, the game trains the observant player or completionist to watch for wall discoloration, lines of light/shadow and new sectors as trip wires, raising and dropping platforms, and linked sequences. [Pinchbeck 2013, 69]

This passage presupposes comparative knowledge of other early first-person shooters and assumes that the reader either accepts Pinchbeck's descriptions at face value or will do the legwork required to find a copy of the game, load it into emulation software (or find a computer capable of running it!), and reproduce Pinchbeck's referenced objects, sites, and narrative arc. This also puts a greater obstacle in the reader's path: Is the reader sufficiently dextrous and committed to complete the demo chapter and find the secrets? Whom does this type of citation include and exclude? Moreover, for game studies more broadly, it is time-consuming, tedious, and space-intensive to give detailed accounts of even a single linear playthrough of a game.

The color palette of browns and grays shouts a level of realism that Wolfenstein 3D didn't get anywhere near. [Editorial note: This first Wolfenstein 3D example unfortunately does not function on some browsers.] A barrel positioned temptingly in the center of the screen just aches to be shot at and delivers a meaty crunching explosion that scatters debris across the screen...The lights vary in this room - we can see the light from the large window to the right. When we pause by this window, the open areas seem huge, and we can see a lake of animated green goo. A glowing piece of armor sitting in the lake tells us straight away that we can leave the corridors and rooms and actually get outside...We skirt around to the left toward the pillars (which are throbbing and glowing with light) and head up the staircase to a plinth with animated scrolling textures, to collect some armor. On the way, a shaven-headed goon with a shotgun bellows at us. We fire, and he flies backward in a gush of blood, dropping the shotgun. We collect it and scoop up some blue health vials and some archaic metal helmets for an armor bonus, and we’re ready to go.

Other innovations introduced in the first level include multiple vertical levels included in the same area. In the third room we enter, Imps stand on a raised platform in the far corner, while Zombie soldiers advance along a walkway that zigzags over green radioactive waste. There are a number of linked secrets, establishing that opening up new areas involves not just finding and triggering buttons and trip wires but triggering things in sequence. In this case, we have a different-colored wall panel, dropping down into a passage that takes us to the lake of waste with the superarmor, then a trip wire in the final room that lowers the Imp platform, announcing dynamic vertical-level adjustment and opening up a little area with a shotgun and shells. Finally, moving back out of this area and toward the second room opens a timed lift in the corner of the secret shotgun area, which we can run back to before it raises again (and it only does this once; some secrets are nonrepeatable). The lift leads to a short corridor with a couple of small armor bonuses before delivering the real reward, a one-way wall with a view over the walkway room. In the space of a few short and small secrets, the game trains the observant player or completionist to watch for wall discoloration, lines of light/shadow and new sectors as trip wires, raising and dropping platforms, and linked sequences.

2 Citation

2.1 Citation in Use

2.2 Citation as Discourse

Texts vary a great deal in their degrees of heterogeneity . . . [they] also differ in the extent to which their heterogeneous elements are integrated, and so in the extent to which their heterogeneity is evident on the surface of the text. . . . Again, texts may or may not be “reaccentuated;” they may or may not be drawn into the prevailing key or tone (e.g., ironic or sentimental) of the surrounding text. Or again, the texts of others may or may not be merged into unattributed background assumptions of the text being presupposed. So a heterogeneous text may have an uneven and “bumpy” textual surface, or a relatively smooth one. [Fairclough 1992, 104]

That we should pay attention to qualities of a textual surface, and to the ways in which its imbricated texts do and do not comport with each other, motivates our framing for the discussion of the citation work below. If we are combining references in text to games and other systems, based on non-discursive experiences, their constitutive intertextuality needs to be examined, along with its effects on the resulting historical discourse.[10] We may want the alignment to have a specific texture, but we also need to be aware that that texture, that surface, is something deserving of reflective consideration and thought. How do the citation of games and the juxtaposition of program and text affect the reader’s experience and comprehension of the argument? What does this do to the issues of presupposition and scholarly assumption? Below we discuss the current practice of game citation in light of both manifest intertextuality and presupposition.

3 Bibliography and Citation in Game Studies

Our familiarity with and access to videogames is taken for granted, since many of us are old enough to recall first-hand experience with the entire history of videogames — a claim that cannot be made by scholars of other media. There is an implicit assumption that we all know what a Super Mario Bros. cartridge looks like, so why bother with thorough descriptions? [Altice 2015, 334]

To claim that videogame bibliography demands a closer allegiance to the practices [of enumerative and analytical bibliography] assumes that a unified practice called “videogame bibliography” even exists. At their best, videogame citations adhere to the barest enumerative models. Even in those texts that most seriously grapple with electronic artifacts as objects that exhibit physical properties worthy of description, such as Kirschenbaum’s Mechanisms or Montfort and Bogost’s Racing the Beam, videogames are still afforded scant bibliographic information. [Altice 2015, 333]

Altice’s claim that no unified “videogame bibliography” practice exists is not difficult to substantiate. As he states, many works that are intimately tied to the exploration of the material constraints and expressive potentials of technical artifacts do not share consistent bibliographic practices. Both works mentioned above, Matt Kirschenbaum’s Mechanisms (a treatise on the oft-overlooked ambiguities in the expression of digital data) and Ian Bogost and Nick Montfort’s Racing the Beam (a platform study into the inner workings of the Atari 2600), come from the same publisher, are intimately involved with the technical distinctions of computer software, and do not share a consistent practice in their bibliographies [Kirschenbaum 2008] [Bogost and Montfort 2009].

As a Famicom scholar, I may possess the terminology to describe that platform’s media but meanwhile lack the platform-specific knowledge to properly cite a PlayStation 2 game. . . . Granting each [reference] its due description poses a sizable research challenge. One solution is to build up a body of platform-specific descriptions that others may use as a model . . . but such shared knowledge will take time and work. [Altice 2015, 337]

Computer game bibliography is distinct from that for other media forms mainly in the complex of technical requirements needed to retrieve the object. GAMECIP’s platform and format vocabularies, outlined in prior work [Kaltman et al. 2015], speak to Altice’s call in a limited way by attempting to codify and standardize some basic descriptive information for computer games. The larger issue, however, is that “rich bibliographic records require a baseline technical understanding of the objects they describe” [Altice 2015, 336].

3.1 Presupposition of DOOM!

We need to consider the context into which DOOM arrived. The very first FPS game was Maze War, created by Steve Colley, Howard Palmer, and Greg Thompson (and other contributors) at the NASA Ames Research Center. Colley estimates that the first version was built during 1973, as an extension of the earlier game Maze, which offered a first-person exploration of a basic wireframe environment. At some point during ’73 or ’74, networked capability was added, enabling multiplayer FPS play. The genre was born out of networked deathmatching. After Thompson moved to MIT, he continued to develop Maze War, adding a server offering personalized games, increasing the number of players to eight, and adding simple bots to the mix. Twenty years before DOOM, all of the prototypical features of the FPS were in place: a 3D real-time environment, simple ludic activity (look, move, shoot, take damage), and a basic set of goals and win/lose conditions — all this and multiplayer networked combat.

Around the same time, Jim Bowery developed Spasim (1974), which he has claimed to be the very first 3D networked multiplayer game. Spasim pitted up to thirty-two players (eight players in four planetary systems) against one another over a network, with each taking control of a spaceship, viewed to other players as a wireframe. A second version expanded the gameplay from simple combat to include resource management and more strategic elements. Whether or not Bowery’s argument that Spasim precurses Maze War and represents the first FPS holds water, its importance as a game is undiminished — even if for no other reason than because Spasim is a clear spiritual ancestor of Elite (Braben and Bell 1984) and its many derivatives. It perhaps even prototypes a game concept that would later spin out into combat-oriented real-time strategy (RTS) or even massively multiplayer online (MMO) gaming.

What certainly differentiates Spasim from Maze War is the perspective. Like other early first-person games, such as BattleZone (Atari 1980) and id’s Hovertank 3D (1991), the game is essentially vehicular, with no representation of the avatar onscreen other than a crosshair. It is interesting that, aside from occasional titles such as Descent (Parallax 1995) and Forsaken (Probe Entertainment 1998), the genre very swiftly settled down into the avatar-based perspective, abandoning vehicular combat more or less completely. It’s also interesting that contemporary shooters often opt for a shift to third-person when including vehicles, such as with Halo: Combat Evolved (Bungie 2002) or Rage (id Software 2011). Half-Life 2’s (Valve Software 2004) first-person car sequences are actually quite unusual. [Pinchbeck 2013, 6]

These three paragraphs reference twelve games spanning a period from 1974 to 2011. Doom does not receive a full in-line citation since it is the topic of the book, and is addressed with in-line references in a previous section. Ignoring the general argument and focusing only on the citations and their relationship to the assertions being made on their behalf, we already encounter some significant issues.

4 Reduction and Intertextual Expression

links text users to a network of prior texts depending on their group membership, and provides a system of coding options for making meanings. Because they help to instantiate or construe the meaning potential of a disciplinary culture, the conventions developed in this way foreclose certain options and make some predictions about meanings possible. [Hyland 2000, 156]

The fact that writing is the dominant medium of academic discourse is not incidental; while pictorial subject matter is alien to written discourse, and requires a reduction to make it amenable to analysis, written subject matter can be iterated without any “gap” with the textual surface that analyzes it. [Lynch 1988, 201]

5 Types and Examples of Reduction

5.1 Video

5.2 Visualization

5.3 Emulation

Surely, it is often said, it is absurd to say that we cannot distinguish between a thing and what is said about that thing. But the constitutive view does not prohibit such distinctions. It offers us a way of seeing these distinctions as actively created achievements rather than as pre-given features of our world. In particular, the distinction between talk and objects-of-talk is seen from the constitutive perspective as the upshot, rather than the condition, of discursive work. [Woolgar 1986, 314]

6 Back to Citation and Archives

7 A Tool for Descriptive and Manifest Citation of Games

1. The need for more consistent bibliographic citation information for computer games.

2. An example use case for the placement and manipulation of various reductions of computer games into online text (in this case, images, videos and live emulation).

3. The need for a managed archive of the reductions used in (2).

7.1 Game v Performance

7.2 Citation Tool

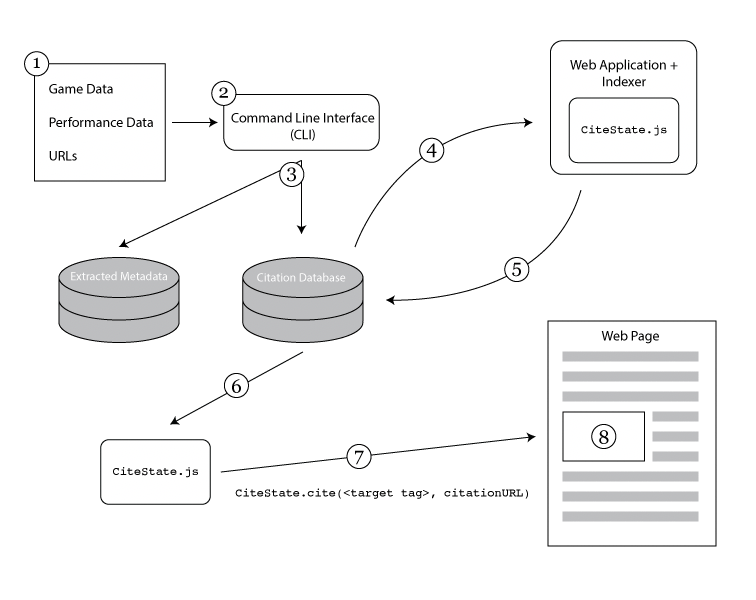

- A command line interface (CLI) responsible for the ingestion of game and performance data, the generation of citations, and the management of the citation database.

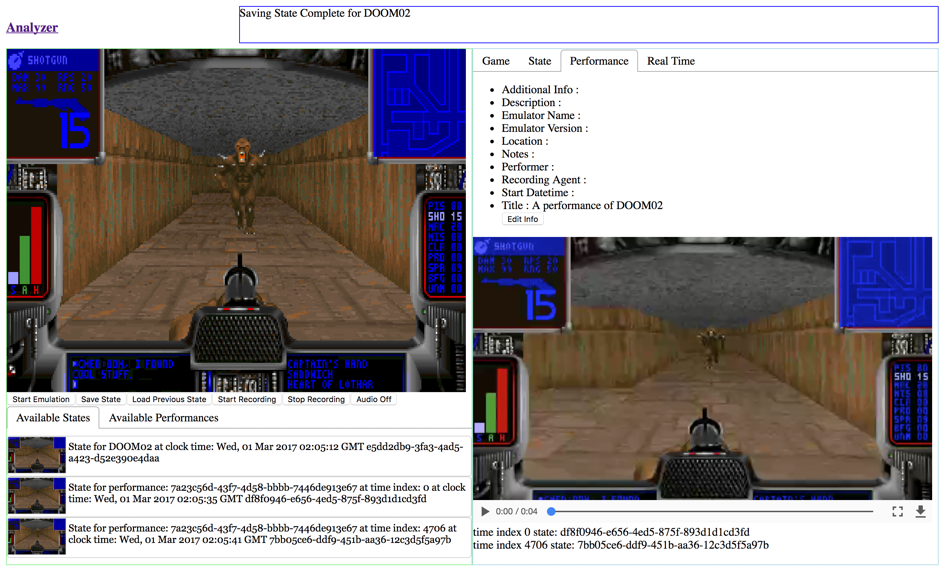

- A web application (the “app”) that enables the live emulation of ingested game data, the live recording of game play performances, and the live recording of computational game states.

| Citation Type | Supported File Types | Supported URI Sources |
| --- | --- | --- |
| Game | .NES ROM Format; .SMC ROM Format; any directory containing a DOS compiled executable; .z64 ROM Format (partial) | MobyGames; Wikipedia |
| Performance | FM2 Replay Format; generic video files | YouTube |
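The support matrix above can be read as a simple dispatch rule from a source to a citation type. The following is a minimal sketch under stated assumptions, not the tool's actual code: the function name `classify_source`, the lookup sets, the video extensions, and the host names are all hypothetical and chosen only for illustration. Directory-based DOS executables are left out because detecting them would require inspecting the file system.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Hypothetical lookup tables derived from the support matrix; the real tool may differ.
GAME_EXTENSIONS = {".nes", ".smc", ".z64"}
PERFORMANCE_EXTENSIONS = {".fm2"}
# Assumed examples of "generic video files"; the source does not list formats.
VIDEO_EXTENSIONS = {".mp4", ".avi", ".mkv", ".webm"}
GAME_HOSTS = {"mobygames.com", "wikipedia.org"}
PERFORMANCE_HOSTS = {"youtube.com", "youtu.be"}


def _host_matches(host, known_hosts):
    """True if host equals a known host or is a subdomain of one."""
    return any(host == k or host.endswith("." + k) for k in known_hosts)


def classify_source(source):
    """Return "game", "performance", or None for an unsupported source."""
    parsed = urlparse(source)
    if parsed.scheme in ("http", "https"):
        host = (parsed.hostname or "").lower()
        if _host_matches(host, GAME_HOSTS):
            return "game"
        if _host_matches(host, PERFORMANCE_HOSTS):
            return "performance"
        return None
    # Not a web URI: fall back to the file extension.
    suffix = PurePosixPath(source).suffix.lower()
    if suffix in GAME_EXTENSIONS:
        return "game"
    if suffix in PERFORMANCE_EXTENSIONS or suffix in VIDEO_EXTENSIONS:
        return "performance"
    return None
```

Under these assumptions, a file named `mario.nes` would be treated as a game source and an `.fm2` replay as a performance source, while anything outside the matrix is rejected rather than guessed at.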

7.3 Web Application

- A basic listing page for the game and performance citations in the database.

- A full text search page that includes all citation records and game save state descriptions.

- A citation listing page that provides active links to previous save states and, for performances, the ability to create quick GIF animations based on a performance video.

- An indexer page that allows for examination of an emulated game, and the creation of game save states and video recordings.
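The search page described above covers both citation records and the descriptions attached to game save states. As a rough illustration of that idea, the sketch below matches a query against both fields in memory. The `Citation` and `SaveState` classes, their fields, and the `search` function are hypothetical names introduced here; the source does not describe the application's schema or search implementation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SaveState:
    # Free-text note describing what the saved game state shows (assumed field).
    description: str


@dataclass
class Citation:
    kind: str   # "game" or "performance"
    title: str
    save_states: List[SaveState] = field(default_factory=list)


def search(citations, query):
    """Return citations whose title or save state descriptions contain every query term."""
    terms = query.lower().split()
    if not terms:
        return []
    hits = []
    for citation in citations:
        parts = [citation.title] + [s.description for s in citation.save_states]
        haystack = " ".join(parts).lower()
        # Simple substring matching; a production search would likely use an index.
        if all(term in haystack for term in terms):
            hits.append(citation)
    return hits
```

Searching save state descriptions alongside titles means a reader could locate a cited moment (for example, a particular secret area) without knowing which game record it was filed under.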